Reinforcement Learning: An Introduction (Adaptive Computation and Machine Learning series)

- Authors: Sutton, Richard S.; Barto, Andrew G.

- Release date: 2018/11/13

- Format: Hardcover

Chapter 9

Exercise 9.1

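Define the feature vector as a one-hot representation of the states $\mathcal{S} = \{s_1, \dots, s_d\}$, that is,

$$\mathbf{x}(s) = \left(\mathbb{1}[s = s_1], \dots, \mathbb{1}[s = s_d]\right)^\top.$$

Then $\hat{v}(s_i, \mathbf{w}) = \mathbf{w}^\top \mathbf{x}(s_i) = w_i$, so each weight is the value estimate of exactly one state, which is the tabular case.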
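The equivalence can be checked numerically. The following is a minimal sketch, assuming states are indexed by integers and using semi-gradient TD(0) as the example update; the function names and the numbers are illustrative and do not come from the book.

```python
import numpy as np

def one_hot(state: int, num_states: int) -> np.ndarray:
    """Feature vector x(s): 1 in the component for `state`, 0 elsewhere."""
    x = np.zeros(num_states)
    x[state] = 1.0
    return x

def v_hat(state: int, w: np.ndarray) -> float:
    """Linear value estimate w^T x(s); with one-hot features this is w[state]."""
    return float(w @ one_hot(state, len(w)))

def semi_gradient_td0_update(w, s, r, s_next, alpha, gamma):
    """One semi-gradient TD(0) step with linear function approximation."""
    delta = r + gamma * v_hat(s_next, w) - v_hat(s, w)
    # The gradient of v_hat with respect to w is x(s), so only w[s] changes.
    return w + alpha * delta * one_hot(s, len(w))

def tabular_td0_update(V, s, r, s_next, alpha, gamma):
    """The ordinary tabular TD(0) step, for comparison."""
    V = V.copy()
    V[s] += alpha * (r + gamma * V[s_next] - V[s])
    return V

w = np.array([0.5, -1.0, 2.0])
w_linear = semi_gradient_td0_update(w, s=1, r=1.0, s_next=2, alpha=0.1, gamma=0.9)
w_tabular = tabular_td0_update(w, s=1, r=1.0, s_next=2, alpha=0.1, gamma=0.9)
```

Both updates change only the entry for the visited state and leave the other weights untouched.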

Exercise 9.2

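There are $n + 1$ choices of $c_{i,j}$ ($c_{i,j} \in \{0, 1, \dots, n\}$) for each $j = 1, \dots, k$. Hence there are $(n + 1)^k$ distinct exponent vectors, and therefore $(n + 1)^k$ distinct features.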

Exercise 9.3

$n = 2$ and $k = 2$, with each $c_{i,j} \in \{0, 1, 2\}$. Hence there are $(n + 1)^k = 3^2 = 9$ features.
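The count can be confirmed by enumerating the exponent vectors directly. This is a small sketch, assuming the polynomial basis $x_i(s) = \prod_j s_j^{c_{i,j}}$ of (9.17); the helper names are hypothetical, and the enumeration order produced by `itertools.product` differs from the order in which the book lists the features.

```python
from itertools import product

def exponent_vectors(n: int, k: int):
    """All vectors (c_1, ..., c_k) with each c_j in {0, 1, ..., n}."""
    return list(product(range(n + 1), repeat=k))

def polynomial_features(s, n: int):
    """Evaluate prod_j s_j ** c_j for every exponent vector c."""
    feats = []
    for c in exponent_vectors(n, len(s)):
        value = 1.0
        for s_j, c_j in zip(s, c):
            value *= s_j ** c_j
        feats.append(value)
    return feats

C = exponent_vectors(n=2, k=2)          # the 9 exponent pairs for n = 2, k = 2
x = polynomial_features((2.0, 3.0), 2)  # features at s = (s1, s2) = (2, 3)
```

For $s = (2, 3)$ the nine values are the monomials $1, s_1, s_2, s_1 s_2, s_1^2, s_2^2, s_1 s_2^2, s_1^2 s_2, s_1^2 s_2^2$ in a different order.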

Exercise 9.4

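Use rectangular tiles rather than square ones: narrow along the dimension that affects the value more, and long along the other, so that generalization occurs mainly along the less important dimension.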
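The effect of the tile shape on generalization can be illustrated with a toy tile coder. This is a rough sketch under several assumptions: a 2-D state, uniformly offset tilings (not the asymmetric offsets the book recommends), and made-up tile widths; `active_tiles` and `shared_tiles` are hypothetical helpers, not a library API.

```python
import math

def active_tiles(s, tile_widths, num_tilings):
    """Return one (tiling, col, row) index per tiling for a 2-D state s."""
    tiles = []
    for t in range(num_tilings):
        # Tiling t is shifted by t / num_tilings of a tile width in each dimension.
        idx = tuple(
            math.floor(s_j / w_j + t / num_tilings)
            for s_j, w_j in zip(s, tile_widths)
        )
        tiles.append((t, *idx))
    return tiles

def shared_tiles(a, b, tile_widths, num_tilings=4):
    """Number of tilings in which states a and b fall into the same tile."""
    return len(set(active_tiles(a, tile_widths, num_tilings))
               & set(active_tiles(b, tile_widths, num_tilings)))

widths = (0.1, 1.0)  # narrow along dimension 0, long along dimension 1

# Two states 0.3 apart along the long dimension still share most tiles.
along_long = shared_tiles((0.52, 0.10), (0.52, 0.40), widths)
# Two states 0.3 apart along the narrow dimension share none.
along_narrow = shared_tiles((0.52, 0.10), (0.82, 0.10), widths)
```

Shared tiles mean shared weights, so an update at one state carries over to the other only when they are separated along the long side of the tiles.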

Chapter 10

Exercise 10.1

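In the Mountain Car task, a fairly sophisticated policy is required to reach the terminal state. Hence, under an arbitrarily chosen initial policy, an episode may never end, and a Monte Carlo method, which updates only at the end of an episode, would never get to learn.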

Exercise 10.2

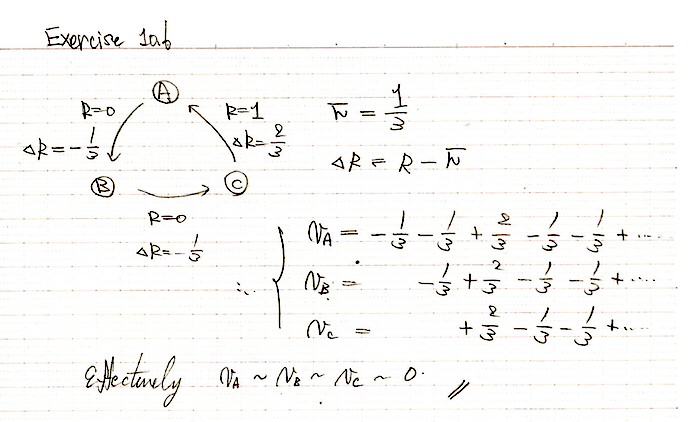
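As a complement to the pseudocode, here is one way the weight update of semi-gradient one-step Expected Sarsa could look in code. It is a sketch, not a transcription of the image: it assumes a linear $\hat{q}$, an $\varepsilon$-greedy policy, and a caller that supplies the feature vectors; all function and parameter names are illustrative.

```python
import numpy as np

def epsilon_greedy_probs(q_values: np.ndarray, epsilon: float) -> np.ndarray:
    """Action probabilities of an epsilon-greedy policy (ties go to the first max)."""
    n = len(q_values)
    probs = np.full(n, epsilon / n)
    probs[int(np.argmax(q_values))] += 1.0 - epsilon
    return probs

def expected_sarsa_update(w, x_sa, r, next_features, terminal,
                          alpha, gamma, epsilon):
    """One semi-gradient Expected Sarsa step for linear q_hat(s, a, w) = w^T x(s, a).

    x_sa:          feature vector x(S, A), shape (d,)
    next_features: x(S', a) for every action a, shape (num_actions, d)
    """
    if terminal:
        target = r
    else:
        q_next = next_features @ w
        pi = epsilon_greedy_probs(q_next, epsilon)
        # Expectation over next actions replaces the sampled q_hat(S', A', w).
        target = r + gamma * float(pi @ q_next)
    delta = target - float(w @ x_sa)
    # Semi-gradient: the target is treated as a constant, so the gradient is x(S, A).
    return w + alpha * delta * x_sa

w = np.array([1.0, 2.0])
x_sa = np.array([1.0, 0.0])
next_features = np.array([[1.0, 0.0], [0.0, 1.0]])
w_step = expected_sarsa_update(w, x_sa, r=0.0, next_features=next_features,
                               terminal=False, alpha=0.5, gamma=1.0, epsilon=0.1)
w_term = expected_sarsa_update(w, x_sa, r=-1.0, next_features=next_features,
                               terminal=True, alpha=0.5, gamma=1.0, epsilon=0.1)
```

The only difference from one-step Sarsa is the target: the value of the next state is averaged under the current policy instead of being evaluated at a single sampled action.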

Exercise 10.3

Longer episodes give less reliable estimates of the true value.

Exercise 10.4

In "Differential semi-gradient Sarsa" on p.251, replace the definition of $\delta$ with the following one: