Note: I had two problems in my setup (2013/06/06). Both are now resolved:

- metadata-agent didn't work well in my setup. This looks like the same problem as this one. Resolved with Bug 972239 - root.conf.helper should be replaced by get_root_helper.
- dnsmasq for the second tenant's private network failed to start depending on the timing, and I had to restart dhcp-agent several times to get it running. This was due to the missing ovs_use_veth setting reported in the RDO Forum.

I will describe the steps to build an "all-in-one" OpenStack demonstration environment using RDO (Grizzly). The installation is done with the "packstack --allinone" command.

Note: Because RDO/Grizzly is being updated rapidly, some steps may have changed by the time you try them. This document is based on the following packages as of 2013/06/06.

OS: RHEL6.4 + latest updates

RDO: rdo-release-grizzly-3.noarch

# rpm -qa | grep openstack
openstack-nova-common-2013.1.1-3.el6.noarch
openstack-dashboard-2013.1.1-1.el6.noarch
openstack-swift-object-1.8.0-2.el6.noarch
kernel-2.6.32-358.6.2.openstack.el6.x86_64
openstack-glance-2013.1-2.el6.noarch
openstack-nova-console-2013.1.1-3.el6.noarch
python-django-openstack-auth-1.0.7-1.el6.noarch
openstack-packstack-2013.1.1-0.8.dev601.el6.noarch
openstack-cinder-2013.1.1-1.el6.noarch
openstack-nova-conductor-2013.1.1-3.el6.noarch
openstack-swift-plugin-swift3-1.0.0-0.20120711git.el6.noarch
openstack-swift-account-1.8.0-2.el6.noarch
openstack-keystone-2013.1-1.el6.noarch
openstack-nova-novncproxy-0.4-7.el6.noarch
openstack-nova-compute-2013.1.1-3.el6.noarch
openstack-quantum-2013.1-3.el6.noarch
openstack-swift-1.8.0-2.el6.noarch
openstack-utils-2013.1-8.el6.noarch
openstack-nova-api-2013.1.1-3.el6.noarch
openstack-swift-proxy-1.8.0-2.el6.noarch
kernel-firmware-2.6.32-358.6.2.openstack.el6.noarch
openstack-nova-scheduler-2013.1.1-3.el6.noarch
openstack-nova-cert-2013.1.1-3.el6.noarch
openstack-quantum-openvswitch-2013.1-3.el6.noarch
openstack-swift-container-1.8.0-2.el6.noarch

You may notice the exotic kernel "kernel-2.6.32-358.6.2.openstack.el6.x86_64". This is a netns-enabled kernel for RHEL6.4. By replacing the standard RHEL6.4 kernel with it, you can use the "network namespace" feature of Quantum.

Network Configuration

The virtual network is configured with the Quantum OVS plugin, which is now the default in the "packstack --allinone" configuration.

The physical network configuration is as below:

------------------------------- Public Network
    |              |  (gw:10.208.81.254)
    |              |
    |eth0          |eth1 10.208.81.123/24
---------------------------
|   |                     |
|  [br-ex]                |
|     ^                   |
|     | Routing           |
|     v                   |
|  [br-int]               |
|                         |
---------------------------

Two physical NICs (eth0, eth1) are connected to the same public network. eth1 (IP: 10.208.81.123) is used for external access to the host Linux. eth0 (without an IP address) is connected to the OVS switch (br-ex), which corresponds to the external virtual network. The other OVS switch (br-int) is an integration switch corresponding to the private virtual networks. The host Linux handles the routing between these OVS switches, which provides the virtual router's function.

The following is the simplest virtual network with a single tenant. You can add more private networks and routers if you like.

------------------------------- Public Network
    |              |  (gw:10.208.81.254)
    |              |
    |eth0          |eth1 10.208.81.123/24
---------------------------
|   |                     |
|  ---------------  External network (ext-network)
|         |               |
|   [rh01-router]         |
|         |               |
|  ---------------  Private network (rh01-private)
|     |       |           |
|   [vm01]  [vm02]        |
---------------------------

In addition to the host Linux IP address, you need a range of public IPs for floating IPs and the routers' external IPs. I used the range 10.208.81.128/27 in my setup.
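To double-check which addresses the /27 block covers, here is a small sketch using plain shell arithmetic. The result should match the allocation pool start=10.208.81.128,end=10.208.81.159 used for ext-network later. The function names ip2int, int2ip and cidr_range are my own and are not part of any OpenStack tool.

```shell
#!/bin/sh
# Sketch: print the first address, last address and size of an IPv4 CIDR block.

# Convert a dotted-quad address such as 10.208.81.128 to a 32-bit integer.
ip2int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "a.b.c.d" into $1 $2 $3 $4
  IFS=$old_ifs
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Convert a 32-bit integer back to dotted-quad notation.
int2ip() {
  echo "$(( ($1 >> 24) & 255 )).$(( ($1 >> 16) & 255 )).$(( ($1 >> 8) & 255 )).$(( $1 & 255 ))"
}

# Print "first last count" for a block written as ADDRESS/PREFIX.
cidr_range() {
  addr=${1%/*}
  prefix=${1#*/}
  n=$(ip2int "$addr")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( n & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$(int2ip "$first") $(int2ip "$last") $(( last - first + 1 ))"
}

cidr_range 10.208.81.128/27   # prints: 10.208.81.128 10.208.81.159 32
```

The routers' gateway ports and the floating IPs share those 32 addresses.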

Now let's start the deployment!

Installing RHEL6.4

Install RHEL6.4 with the "Basic Server" option and apply the latest updates. I recommend creating a volume group "cinder-volumes" on a dedicated disk partition. In my setup, I used /dev/sda5 for it.

# pvcreate /dev/sda5
# vgcreate cinder-volumes /dev/sda5

If you don't create the volume group in advance, packstack creates an image file /var/lib/cinder/cinder-volumes and uses it as a pseudo-physical volume.

Configure the network interfaces as below, following the physical network diagram above.

/etc/sysconfig/network-scripts/ifcfg-eth0

DEVICE=eth0
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=no
BOOTPROTO=none

/etc/sysconfig/network-scripts/ifcfg-eth1

DEVICE=eth1
TYPE=Ethernet
ONBOOT=yes
NM_CONTROLLED=no
BOOTPROTO=static
IPADDR=10.208.81.123
NETMASK=255.255.255.0
GATEWAY=10.208.81.254

/etc/hosts

127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
10.208.81.123 rdodemo01

Unfortunately, it seems SELinux needs to be in permissive mode. I hope an SELinux policy for RDO will become available in the future.

# setenforce 0

/etc/selinux/config

SELINUX=permissive
SELINUXTYPE=targeted

You need to set the following kernel parameters so that security group packet filtering works on the bridge.

/etc/sysctl.conf

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-arptables = 1
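If /etc/sysctl.conf already contains these keys (for example with the value 0), appending the lines blindly leaves two conflicting entries in the file. Here is a small idempotent sketch. The function name ensure_bridge_nf is mine, and it assumes GNU sed for in-place editing.

```shell
#!/bin/sh
# Sketch: make sure the three bridge-netfilter keys are set to 1 in a sysctl
# file, updating existing lines in place and appending missing ones.
# Assumes GNU sed (-i). FILE defaults to /etc/sysctl.conf.
ensure_bridge_nf() {
  file=${1:-/etc/sysctl.conf}
  for key in net.bridge.bridge-nf-call-ip6tables \
             net.bridge.bridge-nf-call-iptables \
             net.bridge.bridge-nf-call-arptables; do
    if grep -q "^$key[[:space:]]*=" "$file"; then
      sed -i "s/^$key[[:space:]]*=.*/$key = 1/" "$file"
    else
      echo "$key = 1" >> "$file"
    fi
  done
}

# Example (as root); "sysctl -p" applies the file once the bridge module is loaded:
#   ensure_bridge_nf /etc/sysctl.conf
#   sysctl -p
```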

The bridge module must also be loaded at boot time. This is done with the following module initialization script.

/etc/sysconfig/modules/openstack-quantum-linuxbridge.modules

#!/bin/sh
modprobe -b bridge >/dev/null 2>&1
exit 0

# chmod u+x /etc/sysconfig/modules/openstack-quantum-linuxbridge.modules

Installing RDO with packstack

Just issue the following three commands :) It takes around 30 minutes to finish the packstack installation.

# yum install -y http://rdo.fedorapeople.org/openstack/openstack-grizzly/rdo-release-grizzly-3.noarch.rpm
# yum install -y openstack-packstack
# packstack --allinone
Welcome to Installer setup utility
Packstack changed given value to required value /root/.ssh/id_rsa.pub

Installing:
Clean Up...                                            [ DONE ]
Setting up ssh keys...root@10.208.81.123's password: <--- Enter root password
....

If you didn't create the volume group "cinder-volumes" in advance, packstack adds the following line to /etc/rc.local.

losetup /dev/loop0 /var/lib/cinder/cinder-volumes

But in my setup, I had to modify it as below.

losetup /dev/loop1 /var/lib/cinder/cinder-volumes
vgchange -ay

I reported it in the RDO forum.
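If you want to script that rc.local change, here is a sketch using GNU sed. fix_rc_local is a hypothetical helper name. /dev/loop1 is simply the device that worked on my machine, so check `losetup -a` first to see which loop devices are already in use.

```shell
#!/bin/sh
# Sketch: turn packstack's rc.local entry
#   losetup /dev/loop0 /var/lib/cinder/cinder-volumes
# into the two lines that worked in my setup. Assumes GNU sed, whose
# replacement text understands \n. FILE defaults to /etc/rc.local.
fix_rc_local() {
  file=${1:-/etc/rc.local}
  img=/var/lib/cinder/cinder-volumes
  if grep -qx "losetup /dev/loop0 $img" "$file"; then
    sed -i "s|^losetup /dev/loop0 $img\$|losetup /dev/loop1 $img\nvgchange -ay|" "$file"
  fi
}

# Example (as root):
#   fix_rc_local /etc/rc.local
```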

You should also disable libvirt's default network, as you don't need it.

# virsh net-destroy default
# virsh net-autostart default --disable

Then attach the physical interface (eth0) to the OVS switch (br-ex) with the following command.

# ovs-vsctl add-port br-ex eth0

# ovs-vsctl show
394e8a6b-a5e0-4fef-8f8f-506889aebfc1
    Bridge br-int
        Port br-int
            Interface br-int
                type: internal
    Bridge br-ex
        Port br-ex
            Interface br-ex
                type: internal
        Port "eth0"
            Interface "eth0"
    ovs_version: "1.10.0"

Apply the following patch as described in the RDO Forum.

Add the following setting as described in the RDO Forum.

- This is filed in RH BZ 969975 (an RH internal entry).

# openstack-config --set /etc/quantum/quantum.conf DEFAULT ovs_use_veth True
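To confirm that the setting ended up in the [DEFAULT] section, you can read it back. ini_get below is a hypothetical helper written in awk and is not part of openstack-utils. It only handles simple `key = value` lines.

```shell
#!/bin/sh
# Sketch: print the value of KEY in [SECTION] of a simple ini file.
# Usage: ini_get FILE SECTION KEY   (only plain "key = value" lines are handled)
ini_get() {
  awk -v section="$2" -v key="$3" '
    /^[ \t]*\[/ {
      # Remember the current section name without brackets or spaces.
      cur = $0
      gsub(/^[ \t]*\[|\][ \t]*$/, "", cur)
      next
    }
    cur == section && $0 ~ ("^[ \t]*" key "[ \t]*=") {
      val = $0
      sub(/^[^=]*=[ \t]*/, "", val)   # drop "key ="
      sub(/[ \t]*$/, "", val)         # drop trailing blanks
      print val
      exit
    }
  ' "$1"
}

# Example:
#   ini_get /etc/quantum/quantum.conf DEFAULT ovs_use_veth
```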

Also, I applied the following patches. I filed them in RH BZ.

# diff -u /usr/lib/python2.6/site-packages/quantum/agent/linux/interface.py{.orig,}
--- /usr/lib/python2.6/site-packages/quantum/agent/linux/interface.py.orig	2013-06-06 17:20:03.928751907 +0900
+++ /usr/lib/python2.6/site-packages/quantum/agent/linux/interface.py	2013-06-08 00:25:35.919838475 +0900
@@ -291,11 +293,11 @@
 
     def _set_device_plugin_tag(self, network_id, device_name, namespace=None):
         plugin_tag = self._get_flavor_by_network_id(network_id)
-        device = ip_lib.IPDevice(device_name, self.conf.root_helper, namespace)
+        device = ip_lib.IPDevice(device_name, self.root_helper, namespace)
         device.link.set_alias(plugin_tag)
 
     def _get_device_plugin_tag(self, device_name, namespace=None):
-        device = ip_lib.IPDevice(device_name, self.conf.root_helper, namespace)
+        device = ip_lib.IPDevice(device_name, self.root_helper, namespace)
         return device.link.alias
 
     def get_device_name(self, port):

# diff -u /usr/lib/python2.6/site-packages/quantum/agent/dhcp_agent.py{.orig,}
--- /usr/lib/python2.6/site-packages/quantum/agent/dhcp_agent.py.orig	2013-06-07 23:57:42.259839168 +0900
+++ /usr/lib/python2.6/site-packages/quantum/agent/dhcp_agent.py	2013-06-07 23:58:13.121839155 +0900
@@ -324,7 +324,7 @@
         pm = external_process.ProcessManager(
             self.conf,
             network.id,
-            self.conf.root_helper,
+            self.root_helper,
             self._ns_name(network))
         pm.enable(callback)
 
@@ -332,7 +332,7 @@
         pm = external_process.ProcessManager(
             self.conf,
             network.id,
-            self.conf.root_helper,
+            self.root_helper,
             self._ns_name(network))
         pm.disable()
 

# diff -u /usr/lib/python2.6/site-packages/quantum/agent/l3_agent.py{.orig,}
--- /usr/lib/python2.6/site-packages/quantum/agent/l3_agent.py.orig	2013-06-07 23:59:25.365839124 +0900
+++ /usr/lib/python2.6/site-packages/quantum/agent/l3_agent.py	2013-06-07 23:59:36.360839119 +0900
@@ -649,12 +649,12 @@
                'via', route['nexthop']]
         #TODO(nati) move this code to iplib
         if self.conf.use_namespaces:
-            ip_wrapper = ip_lib.IPWrapper(self.conf.root_helper,
+            ip_wrapper = ip_lib.IPWrapper(self.root_helper,
                                           namespace=ri.ns_name())
             ip_wrapper.netns.execute(cmd, check_exit_code=False)
         else:
             utils.execute(cmd, check_exit_code=False,
-                          root_helper=self.conf.root_helper)
+                          root_helper=self.root_helper)
 
     def routes_updated(self, ri):
         new_routes = ri.router['routes']
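Before or after patching, it helps to see where the agents still reference self.conf.root_helper. This grep sketch lists the remaining occurrences under the Quantum agent directory. The function name is mine. A hit is only a candidate for review, not necessarily a bug.

```shell
#!/bin/sh
# Sketch: list remaining "self.conf.root_helper" references in Python files.
# DIR defaults to the Quantum agent code installed by RDO on RHEL6.
# Uses GNU grep (--include).
find_conf_root_helper() {
  dir=${1:-/usr/lib/python2.6/site-packages/quantum/agent}
  grep -rn --include='*.py' 'self\.conf\.root_helper' "$dir"
}

# Example:
#   find_conf_root_helper
```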

Finally, reboot the server at this point, because the new netns-enabled kernel was installed during this process.

# reboot
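After the reboot, it's worth confirming that the netns-enabled kernel is actually running. This sketch only inspects the release string. The function name is_netns_kernel is made up, and the ".openstack." pattern is taken from the kernel package listed earlier.

```shell
#!/bin/sh
# Sketch: report whether a kernel release string looks like the RDO
# netns-enabled kernel (".openstack." in the release, as in
# 2.6.32-358.6.2.openstack.el6.x86_64). Defaults to the running kernel.
is_netns_kernel() {
  release=${1:-$(uname -r)}
  case $release in
    *.openstack.*) echo "yes: $release" ;;
    *)             echo "no: $release"; return 1 ;;
  esac
}

# Example, after the reboot:
#   is_netns_kernel || echo "still on the stock kernel; check /boot/grub/grub.conf"
```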

Creating tenants and users

Now you can access the Horizon dashboard through the URL "http://

# cat keystonerc_admin | grep PASSWORD
export OS_PASSWORD=39826ef5fd4641ff

You can change the password through the dashboard or using CLI as below.

# . keystonerc_admin
# keystone user-password-update --pass <new password> admin

Once you have changed the password, update "keystonerc_admin" with the new value and source it again.
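You can edit keystonerc_admin by hand, but here is a sketch that rewrites only the OS_PASSWORD line and leaves the rest of the file alone. set_rc_password is a hypothetical helper. It assumes the file uses the `export OS_PASSWORD=...` form shown above. The new password must not contain spaces or shell metacharacters, because the file is sourced with the value unquoted.

```shell
#!/bin/sh
# Sketch: replace the "export OS_PASSWORD=..." line in a keystonerc file.
# Usage: set_rc_password FILE NEW_PASSWORD
set_rc_password() {
  file=$1
  tmp=$(mktemp) || return 1
  # Pass the password through the environment so awk does not
  # interpret backslashes in it (as it would with awk -v).
  NEWPW=$2 awk '
    /^export OS_PASSWORD=/ { print "export OS_PASSWORD=" ENVIRON["NEWPW"]; next }
    { print }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Example:
#   set_rc_password keystonerc_admin 'NewPassw0rd'
#   . ./keystonerc_admin
```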

Now I will create the following tenants and users for demonstration purposes.

| user | tenant | role |
|---|---|---|
| demo01 | redhat01 | Member |
| demo02 | redhat02 | Member |
| demo00 | redhat01, redhat02 | admin |

Though you can use the dashboard for this, I will use the CLI here simply because describing the GUI steps is too tedious...

# . keystonerc_admin
# keystone tenant-create --name redhat01
# keystone tenant-create --name redhat02
# keystone user-create --name demo00 --pass xxxxxxxx
# keystone user-create --name demo01 --pass xxxxxxxx
# keystone user-create --name demo02 --pass xxxxxxxx
# keystone user-role-add --user demo00 --role admin --tenant redhat01
# keystone user-role-add --user demo00 --role admin --tenant redhat02
# keystone user-role-add --user demo01 --role Member --tenant redhat01
# keystone user-role-add --user demo02 --role Member --tenant redhat02
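The same commands can be driven from the table, which makes it easier to add more demo users later. This is a sketch. create_demo_accounts is a hypothetical function, RUN=echo turns it into a dry run that only prints the keystone commands, and PASSWORD stands in for the xxxxxxxx placeholder above.

```shell
#!/bin/sh
# Sketch: create the demo tenants, users and role assignments from the table.
# Set RUN=echo to print the keystone commands instead of executing them.
create_demo_accounts() {
  pw=${PASSWORD:-xxxxxxxx}   # placeholder; set PASSWORD before a real run
  for t in redhat01 redhat02; do
    ${RUN:-} keystone tenant-create --name "$t"
  done
  for u in demo00 demo01 demo02; do
    ${RUN:-} keystone user-create --name "$u" --pass "$pw"
  done
  # One "user tenant role" triple per line, matching the table.
  while read -r user tenant role; do
    ${RUN:-} keystone user-role-add --user "$user" --role "$role" \
      --tenant "$tenant" < /dev/null
  done <<'EOF'
demo00 redhat01 admin
demo00 redhat02 admin
demo01 redhat01 Member
demo02 redhat02 Member
EOF
}

# Dry run first, then the real thing (with keystonerc_admin sourced):
#   RUN=echo create_demo_accounts
#   PASSWORD='...' create_demo_accounts
```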

Creating virtual networks

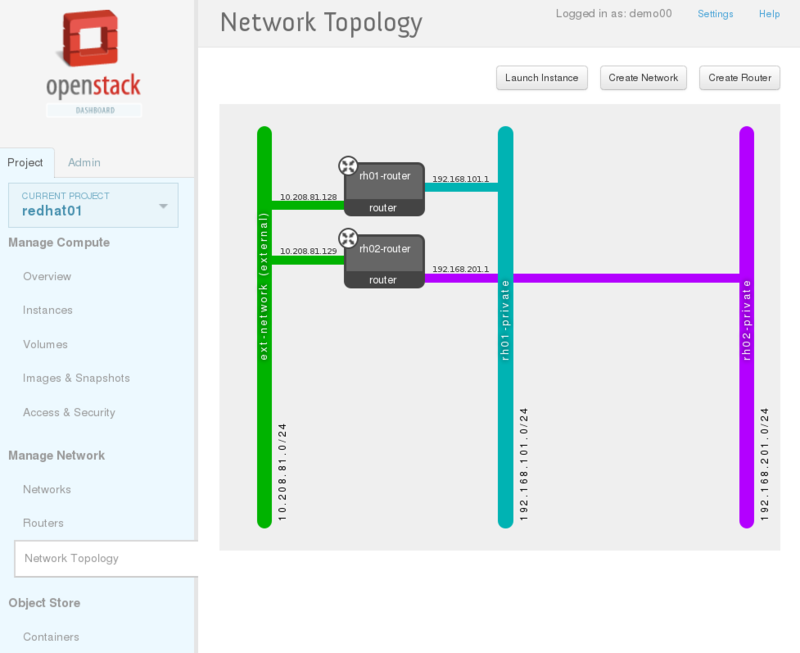

I will assign a private router and a private network to each tenant (redhat01, redhat02).

Define the external network.

# tenant=$(keystone tenant-list | awk '/service/ {print $2}')

# quantum net-create --tenant-id $tenant ext-network --shared --provider:network_type local --router:external=True

# quantum subnet-create --tenant-id $tenant --gateway 10.208.81.254 --disable-dhcp --allocation-pool start=10.208.81.128,end=10.208.81.159 ext-network 10.208.81.0/24

Create a router and private network for redhat01.

# tenant=$(keystone tenant-list|awk '/redhat01/ {print $2}')

# quantum router-create --tenant-id $tenant rh01-router

# quantum router-gateway-set rh01-router ext-network

# quantum net-create --tenant-id $tenant rh01-private --provider:network_type local

# quantum subnet-create --tenant-id $tenant --name rh01-private-subnet --dns-nameserver 8.8.8.8 rh01-private 192.168.101.0/24

# quantum router-interface-add rh01-router rh01-private-subnet

Create a router and private network for redhat02.

# tenant=$(keystone tenant-list|awk '/redhat02/ {print $2}')

# quantum router-create --tenant-id $tenant rh02-router

# quantum router-gateway-set rh02-router ext-network

# quantum net-create --tenant-id $tenant rh02-private --provider:network_type local

# quantum subnet-create --tenant-id $tenant --name rh02-private-subnet --dns-nameserver 8.8.8.8 rh02-private 192.168.201.0/24

# quantum router-interface-add rh02-router rh02-private-subnet

You can check the network topology from the dashboard. When you log in as demo00, you can see both private networks. On the other hand, when you log in as demo01, you see only the private network assigned to the tenant redhat01.
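The redhat01 and redhat02 steps differ only in names and the subnet, so they can be wrapped in a function. This is a sketch, not an RDO tool. tenant_id_from_list and create_tenant_network are hypothetical names, and RUN=echo gives a dry run. The ID lookup assumes the usual `| id | name | enabled |` table printed by keystone tenant-list, and it matches the name column exactly, whereas awk '/redhat01/' would also pick up a tenant called, say, redhat010.

```shell
#!/bin/sh
# Sketch: the per-tenant router/network steps as reusable functions.
# Set RUN=echo to print the quantum commands instead of executing them.

# Read "keystone tenant-list" output on stdin and print the ID whose
# name column equals $1 exactly.
tenant_id_from_list() {
  awk -F'|' -v name="$1" '{
    id = $2; n = $3
    gsub(/[ \t]/, "", id); gsub(/[ \t]/, "", n)
    if (n == name) { print id; exit }
  }'
}

# $1 name prefix (e.g. rh01), $2 tenant ID, $3 private subnet CIDR.
create_tenant_network() {
  prefix=$1 tenant=$2 cidr=$3
  ${RUN:-} quantum router-create --tenant-id "$tenant" "$prefix-router"
  ${RUN:-} quantum router-gateway-set "$prefix-router" ext-network
  ${RUN:-} quantum net-create --tenant-id "$tenant" "$prefix-private" \
    --provider:network_type local
  ${RUN:-} quantum subnet-create --tenant-id "$tenant" \
    --name "$prefix-private-subnet" --dns-nameserver 8.8.8.8 \
    "$prefix-private" "$cidr"
  ${RUN:-} quantum router-interface-add "$prefix-router" "$prefix-private-subnet"
}

# Example reproducing the redhat01 steps:
#   tenant=$(keystone tenant-list | tenant_id_from_list redhat01)
#   create_tenant_network rh01 "$tenant" 192.168.101.0/24
```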

Running an instance

Now you can follow the steps on the RDO website: "Running an Instance".

First, as demo00, add the Fedora 18 image as a public image. Then you can launch it as demo01 or demo02.

By the way, you need to set "NOZEROCONF=yes" in /etc/sysconfig/network of the instance image so that it can access the metadata server. Without it, the network scripts add a link-local route for 169.254.0.0/16, so requests to the metadata address 169.254.169.254 are not sent to the router. The Fedora 18 image mentioned in the guide above doesn't have this setting, so you'd better create a snapshot image with it. First, launch an instance with the Fedora 18 image and modify /etc/sysconfig/network. Then create a snapshot of this instance.

I reported this in RDO Forum.
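Inside the instance (as root), you can make the change idempotently before taking the snapshot. set_nozeroconf is a hypothetical helper, and it assumes GNU sed, which Fedora ships.

```shell
#!/bin/sh
# Sketch: ensure NOZEROCONF=yes in a network config file, replacing any
# existing NOZEROCONF line. FILE defaults to /etc/sysconfig/network.
set_nozeroconf() {
  file=${1:-/etc/sysconfig/network}
  if grep -q '^NOZEROCONF=' "$file"; then
    sed -i 's/^NOZEROCONF=.*/NOZEROCONF=yes/' "$file"
  else
    echo 'NOZEROCONF=yes' >> "$file"
  fi
}

# Example, inside the running Fedora 18 instance before the snapshot:
#   set_nozeroconf /etc/sysconfig/network
```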

Enjoy!